Entities

Risk Subdomain

1.2. Exposure to toxic content

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

A TikTok spokesperson didn't answer questions from BuzzFeed News about how the company polices videos on the platform, but reaffirmed its commitment to getting rid of them.

The app's "Need Help?" page provides contacts for the United States…

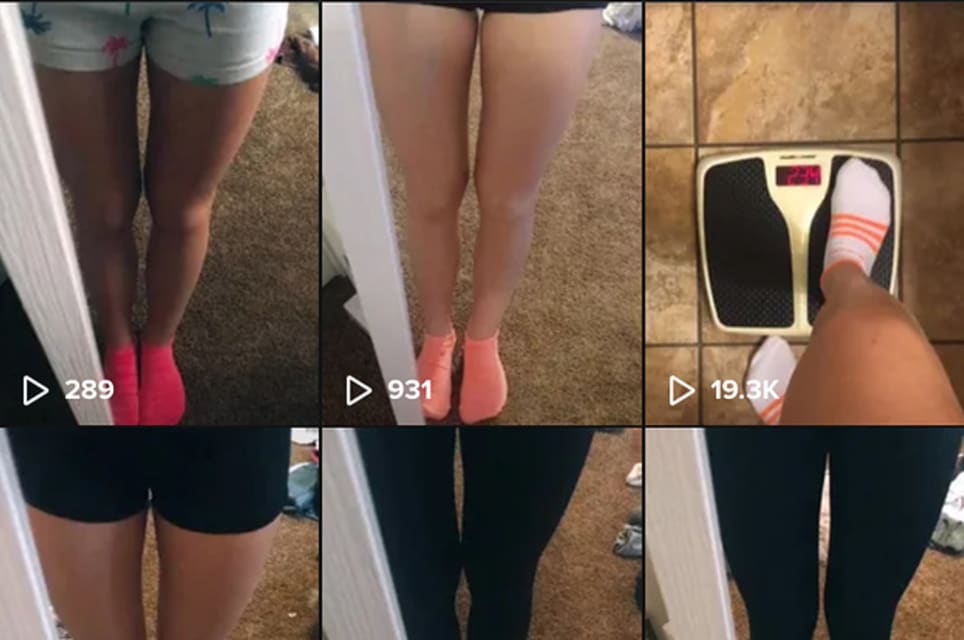

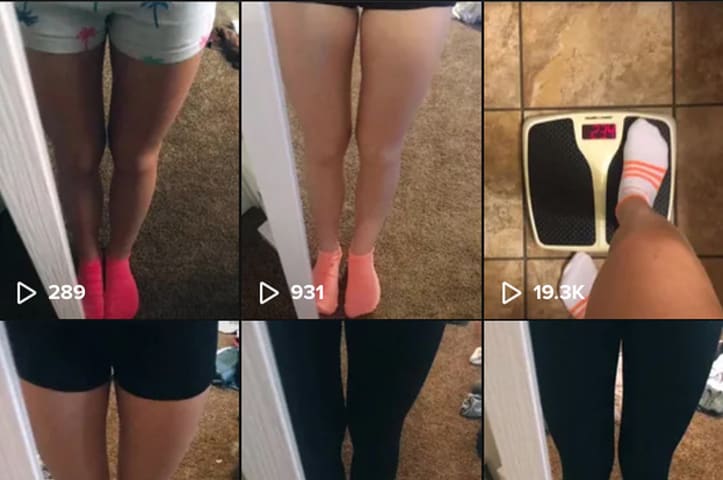

TikTok's algorithm is almost too good at suggesting relatable content, to the point of being detrimental to some users' mental health.

It's nearly impossible to avoid triggering content on TikTok, and because of the nature of the app's ne…

TikTok’s recommendation algorithm pushes self-harm and eating disorder content to teenagers within minutes of them expressing interest in the topics, research suggests.

The Center for Countering Digital Hate (CCDH) found that the video-shar…

Similar Incidents

Biased Sentiment Analysis
