Risk Subdomain

1.1. Unfair discrimination and misrepresentation

Risk Domain

- Discrimination and Toxicity

Entity

AI

Timing

Post-deployment

Intent

Unintentional

Incident Reports

Reports Timeline

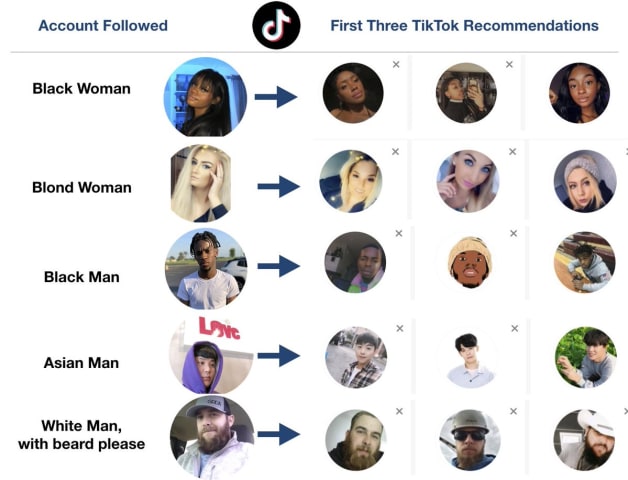

When artificial intelligence researcher Marc Faddoul joined TikTok a few days ago, he saw something concerning: When he followed a new account, the profiles recommended by TikTok seemed eerily, physically similar to the profile picture of t…

A TikTok novelty: FACE-BASED FILTER BUBBLES

The AI-bias techlash seems to have had no impact on newer platforms. Follow a random profile, and TikTok will only recommend people who look almost the same.

Let’s do the experiment from a fresh a…

According to an experiment performed by artificial intelligence researcher Marc Faddoul, the algorithm TikTok uses to suggest new users to follow might have a racial bias.

Faddoul, an AI researcher from the University of California, Berkele…

A little experiment by an artificial intelligence researcher is raising questions about how TikTok's recommendation algorithm suggests new creators to users.

Specifically, the question is whether that algorithm is sorting suggestions based …
